Beyond Air: Meeting the Extreme Heat Challenge

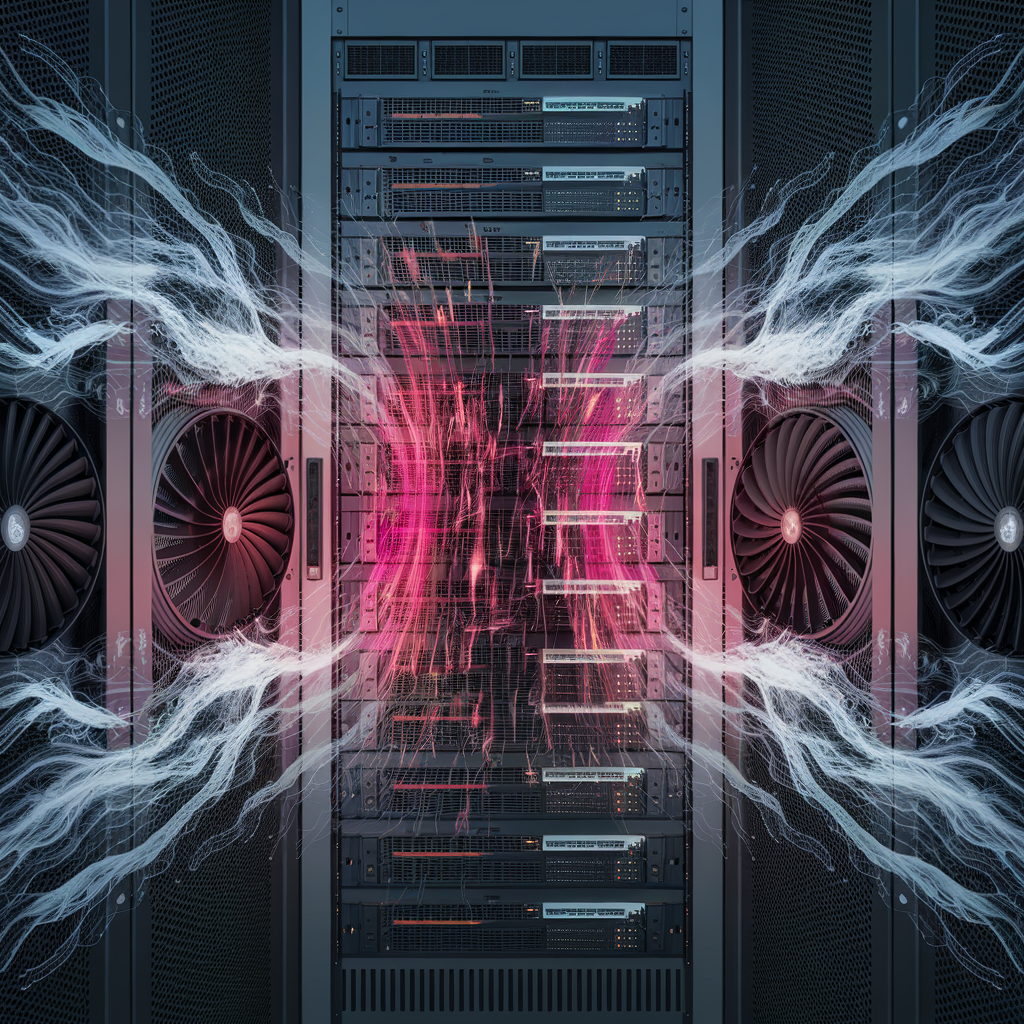

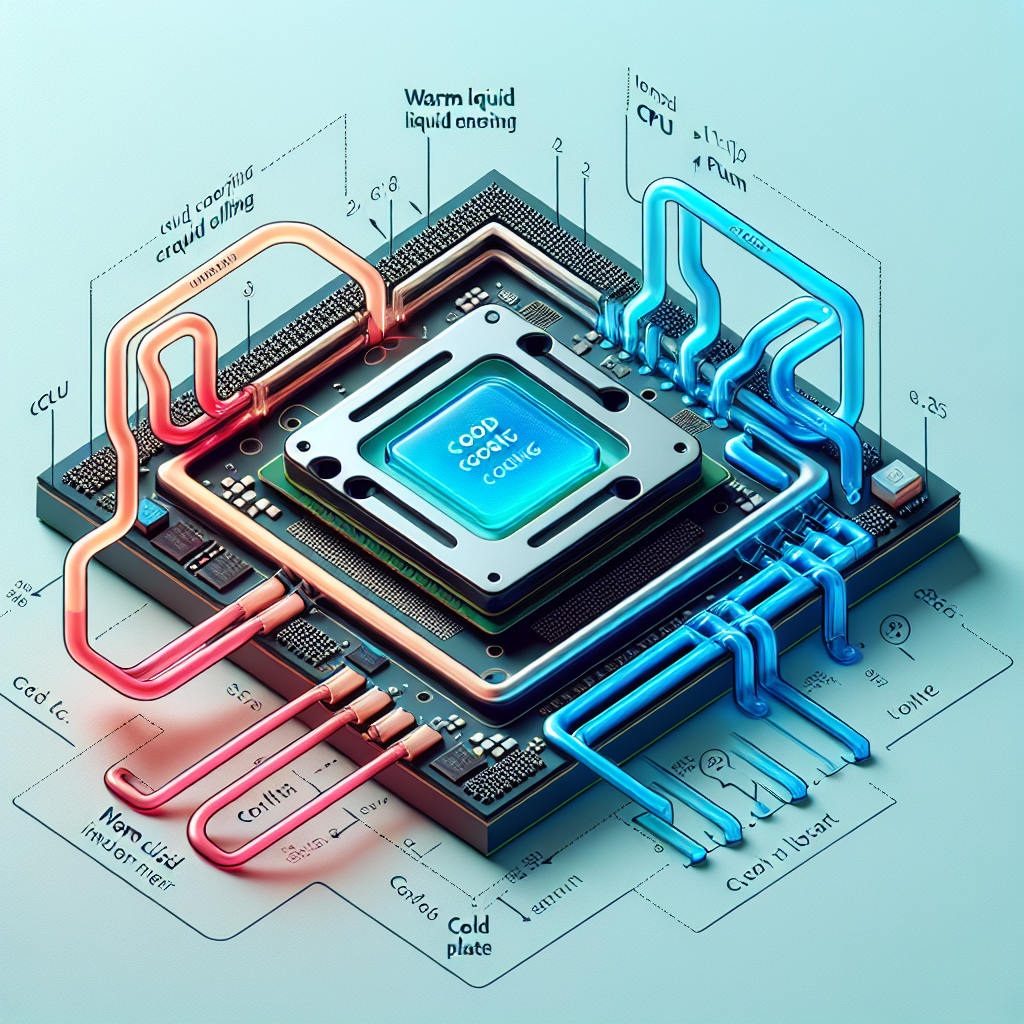

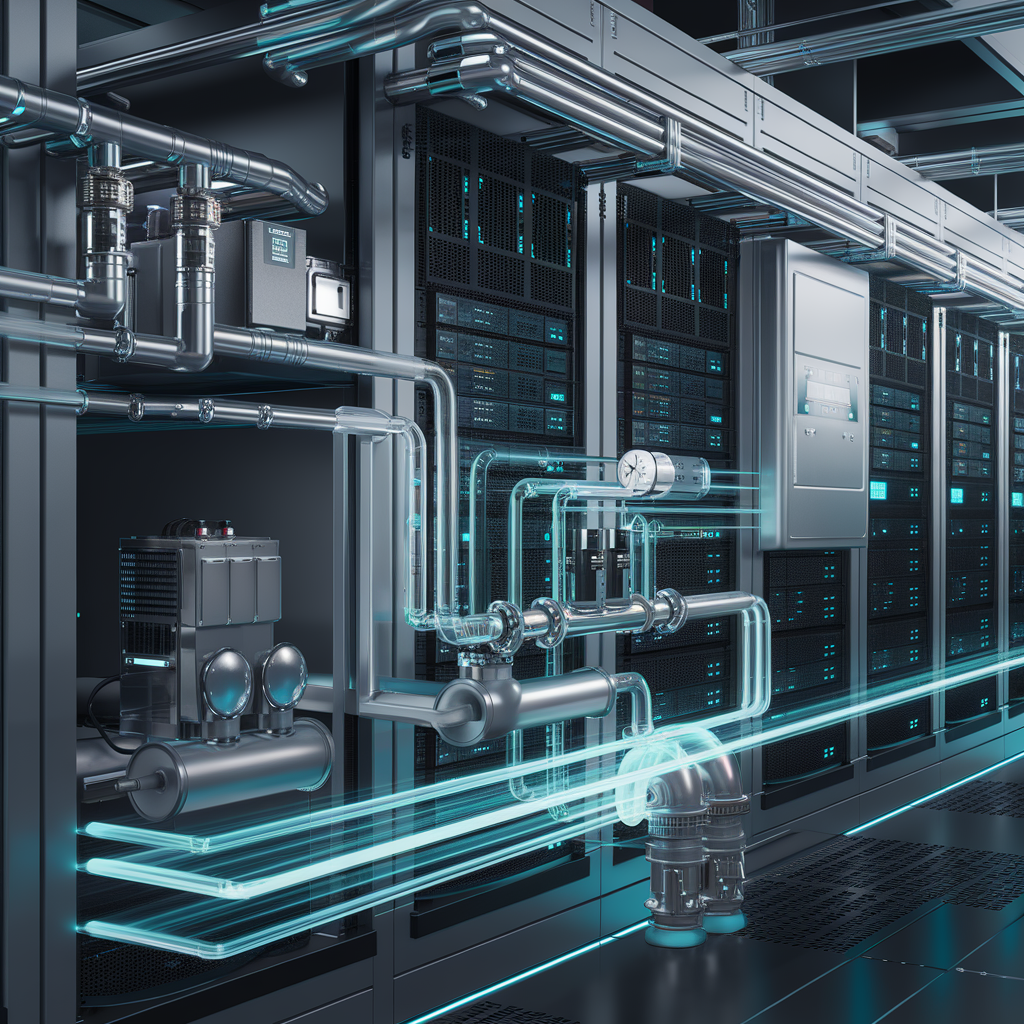

Our exploration of datacenter cooling has covered the fundamental challenges, traditional systems, and the sophisticated air/water strategies employed by hyperscalers. However, the unprecedented power density of the latest AI computing platforms is pushing these traditional methods to their absolute limits. This final article in our initial series examines why air cooling is becoming insufficient and introduces liquid cooling, the family of technologies poised to define the next era of datacenter cooling.

The announcement of systems like the NVIDIA GB200, requiring 120 kW per rack and relying *exclusively* on liquid cooling, signals a fundamental shift in datacenter design. Understanding this transition is crucial for anyone planning or operating future-ready infrastructure.
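A quick back-of-the-envelope calculation shows why a 120 kW rack pushes air past its practical limit. The sketch below solves the sensible-heat balance Q = ρ · V̇ · c_p · ΔT for the volumetric flow V̇ needed to carry a given heat load. The property values (approximate room-temperature density and specific heat) and the allowed temperature rises (15 K for air, 10 K for water) are illustrative assumptions, not design figures, and the `Coolant` type and `required_flow_m3_s` helper are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    """Hypothetical container for approximate coolant properties."""
    name: str
    density_kg_m3: float        # rho, kg/m^3
    specific_heat_j_kgk: float  # c_p, J/(kg*K)


def required_flow_m3_s(heat_w: float, coolant: Coolant, delta_t_k: float) -> float:
    """Volumetric flow needed to remove heat_w watts with a coolant temperature rise of delta_t_k.

    Solves the sensible-heat balance Q = rho * V_dot * c_p * dT for V_dot.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (coolant.density_kg_m3 * coolant.specific_heat_j_kgk * delta_t_k)


# Assumed, approximate property values near room temperature.
AIR = Coolant("air", 1.2, 1005.0)
WATER = Coolant("water", 997.0, 4186.0)

RACK_HEAT_W = 120_000.0  # the 120 kW per-rack figure cited for GB200-class systems

if __name__ == "__main__":
    air = required_flow_m3_s(RACK_HEAT_W, AIR, delta_t_k=15.0)      # assumed 15 K air rise
    water = required_flow_m3_s(RACK_HEAT_W, WATER, delta_t_k=10.0)  # assumed 10 K water rise
    print(f"air:   {air:.2f} m^3/s (~{air * 2118.88:,.0f} CFM)")    # 1 m^3/s is about 2118.88 CFM
    print(f"water: {water * 1000:.2f} L/s (~{water * 60_000:.0f} L/min)")
    print(f"air needs ~{air / water:,.0f}x the volume flow of water")
```

Under these assumptions, a single rack needs on the order of 6.6 m³/s of air (roughly 14,000 CFM) versus under 3 L/s of water, a volumetric difference of more than three orders of magnitude. That gap, driven by water's far higher density and specific heat, is the physical root of the shift toward liquid.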

The era of air-dominant cooling is yielding to liquid.