The AI Heat Crisis: Why Cooling is Datacenter Critical

Introduction: Powering the Future, Managing the Heat

Datacenters are the backbone of modern technology, supporting everything from global communications to the complex computations of Artificial Intelligence. The explosive growth of AI, particularly Generative AI, is dramatically increasing the demand for processing power, leading to an unprecedented rise in energy consumption and, consequently, heat generation within these facilities.

This series explores the critical infrastructure of datacenters. Having previously discussed the electrical systems grappling with this new power demand, we now turn to cooling—the essential countermeasure against the thermal energy produced. Cooling is not just about comfort; it's a fundamental engineering challenge, crucial for performance, reliability, and economic viability in the age of high-density computing.

Generative AI pushes hardware to new thermal limits.

The Direct Link: Electricity and Heat

The fundamental physics are simple: electrical power used by IT equipment is converted almost entirely into heat. One kilowatt of electricity powering a server generates roughly one kilowatt of heat that must be removed. This thermal output is characterized by the **Thermal Design Power (TDP)** of components like CPUs and GPUs.

For years, server rack densities were relatively low (5-10 kW). Hyperscale computing increased the *scale* of operations, but the *density* per rack grew slowly. The AI revolution changes this. High-performance AI accelerators are dramatically increasing the power consumption, and therefore the heat output, of individual servers and racks. Where racks previously drew 15-20 kW, systems like the NVIDIA GB200 NVL72 now require around 120 kW per rack and are cooled *exclusively* by liquid. Future chips are projected to exceed 1500 W TDP.
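To get a feel for why a 120 kW rack pushes past air cooling, the sensible-heat balance Q = m_dot * c_p * delta_T can be solved for the coolant flow needed to carry the heat away. The sketch below is a rough illustration only: the fluid properties are textbook approximations, and the temperature rises (12 K for air, 10 K for water) are assumptions chosen for the example, not figures from any specific facility or vendor.

```python
# Sensible-heat balance: Q = m_dot * c_p * delta_T, solved for flow rate.
# Fluid properties are approximate textbook values near room temperature.
AIR_CP = 1005.0         # J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)
WATER_DENSITY = 1000.0  # kg/m^3


def mass_flow_kg_s(heat_w, cp, delta_t_k):
    """Mass flow needed to absorb heat_w watts with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (cp * delta_t_k)


def volume_flow_m3_s(heat_w, cp, density, delta_t_k):
    """Volumetric flow corresponding to the required mass flow."""
    return mass_flow_kg_s(heat_w, cp, delta_t_k) / density


# Assumed coolant temperature rises (illustrative only).
AIR_DELTA_T_K = 12.0
WATER_DELTA_T_K = 10.0

for rack_kw in (10, 120):
    heat_w = rack_kw * 1000.0  # nearly all electrical input becomes heat
    air = volume_flow_m3_s(heat_w, AIR_CP, AIR_DENSITY, AIR_DELTA_T_K)
    water = volume_flow_m3_s(heat_w, WATER_CP, WATER_DENSITY, WATER_DELTA_T_K)
    print(f"{rack_kw:>4} kW rack: air {air:.2f} m^3/s, water {water * 1000:.2f} L/s")
```

Under these assumptions, the volume of air required is on the order of a few thousand times the volume of water for the same heat load, which is one way to see why the densest racks move to liquid.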

Keeping equipment within its operating temperature range (typically 5°C to 30°C for sensitive hardware) is vital. Exceeding these limits can significantly shorten the lifespan of expensive IT equipment, leading to high replacement costs and unplanned downtime.

Every watt consumed by IT becomes heat that must be dissipated.

Measuring Efficiency: Beyond IT Power

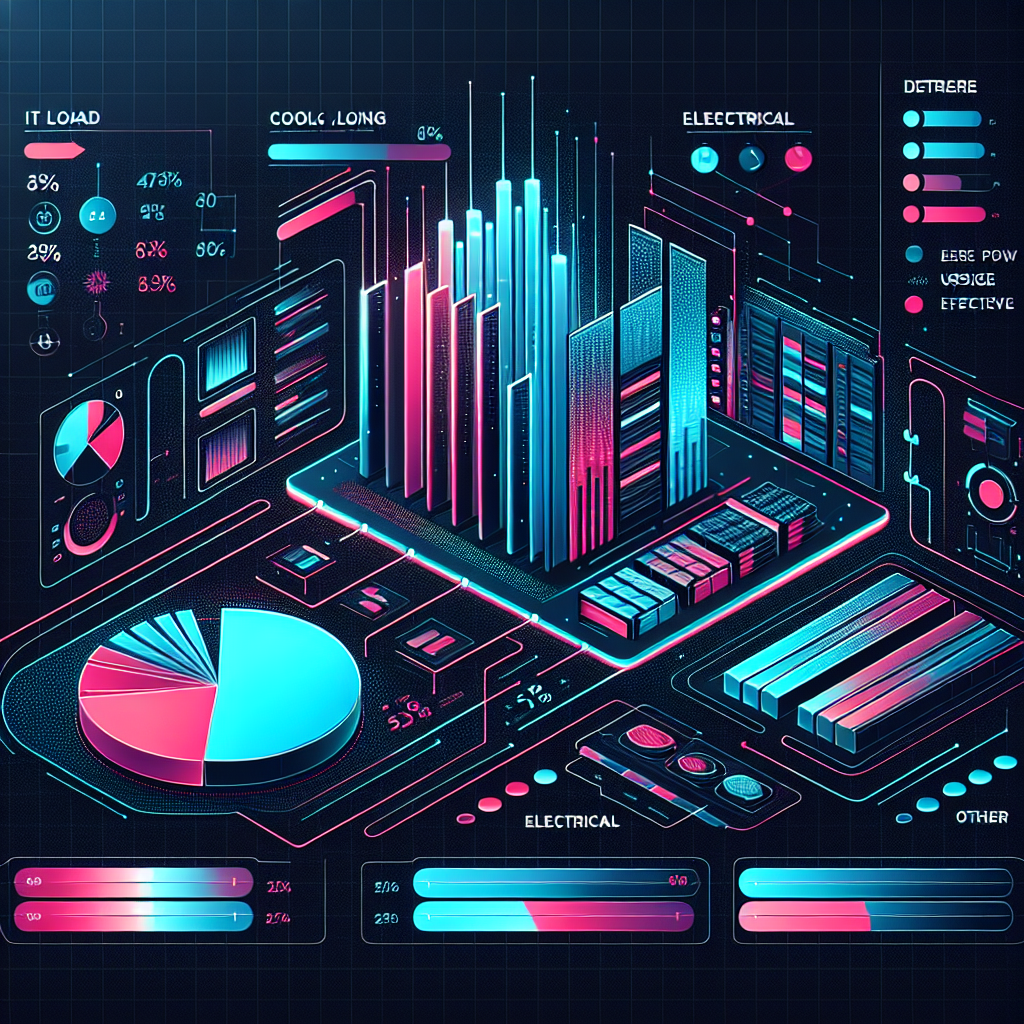

Evaluating datacenter efficiency requires looking beyond just the IT load. The primary metric is **Power Usage Effectiveness (PUE)**: Total Facility Power divided by IT Equipment Power. A lower PUE indicates less energy overhead.

- PUE 1.0 = Perfect efficiency (impossible in reality).

- PUE 1.5 = 0.5 watts of infrastructure power for every 1 watt of IT power.
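The definition above reduces to a single division. A minimal sketch follows; the function names and the sample readings are hypothetical and chosen only to reproduce the PUE 1.5 case from the list.

```python
def pue(total_facility_kw, it_kw):
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    if total_facility_kw < it_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_kw


def overhead_per_it_watt(pue_value):
    """Watts of non-IT (infrastructure) power spent per watt of IT power."""
    return pue_value - 1.0


# Hypothetical readings: 1,500 kW at the facility meter, 1,000 kW of IT load.
example = pue(1500.0, 1000.0)
print(f"PUE = {example:.2f}, overhead = {overhead_per_it_watt(example):.2f} W per IT watt")
```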

While the industry average PUE hovers around 1.6 (often based on surveys excluding large hyperscalers), leading hyperscale operators consistently achieve much lower PUEs, often between 1.1 and 1.2. They achieve this through sophisticated designs, optimized operations, and leveraging environmental conditions (which we'll explore in Part 3).

Cooling systems typically account for the largest portion (60-80%) of non-IT energy consumption. Reducing cooling power is a primary goal for improving PUE and cutting operating expenses.

Approximate non-IT energy breakdown:

- Cooling Systems: 60-80%

- Electrical Systems (UPS, Distribution): 15-30%

- Other (Lighting, Admin): 5-20%
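Combining the PUE figures with the approximate 60-80% cooling share gives a rough sense of how much power cooling draws in absolute terms. The sketch below assumes a hypothetical 1,000 kW IT load and treats the share range as a simple multiplier on non-IT power; real facilities will differ.

```python
def overhead_kw(it_kw, pue_value):
    """Non-IT power implied by a given IT load and PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_kw * (pue_value - 1.0)


def cooling_range_kw(it_kw, pue_value, share_low=0.60, share_high=0.80):
    """Low and high estimates of cooling power, using the approximate
    60-80% share of non-IT energy as the default range."""
    non_it = overhead_kw(it_kw, pue_value)
    return non_it * share_low, non_it * share_high


IT_LOAD_KW = 1000.0  # hypothetical IT load, for illustration only

for label, pue_value in (("industry average", 1.6), ("hyperscale", 1.2)):
    low, high = cooling_range_kw(IT_LOAD_KW, pue_value)
    print(f"{label} (PUE {pue_value}): cooling roughly {low:.0f}-{high:.0f} kW")
```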

It's worth noting that PUE has limitations. Server fan power, for example, is usually counted as IT load. A liquid-cooled server might reduce total facility power but could paradoxically *increase* PUE: if its fans use much less power than those of an air-cooled counterpart, the IT load in the PUE denominator shrinks, and the ratio can rise even though overall consumption falls.
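A small worked example makes the effect concrete. All wattages below are hypothetical, chosen only to show the direction of the effect: the liquid-cooled configuration draws less power in total, yet reports a slightly higher PUE because its smaller fan load shrinks the IT figure in the denominator.

```python
def pue(total_facility_w, it_w):
    """Total facility power divided by IT power."""
    return total_facility_w / it_w


# Hypothetical per-server figures (watts), for illustration only.
air_cooled = {"compute_w": 900.0, "server_fans_w": 100.0, "facility_overhead_w": 400.0}
liquid_cooled = {"compute_w": 900.0, "server_fans_w": 20.0, "facility_overhead_w": 380.0}


def summarize(cfg):
    """Return (total facility power, PUE), counting server fans as IT load."""
    it_w = cfg["compute_w"] + cfg["server_fans_w"]
    total_w = it_w + cfg["facility_overhead_w"]
    return total_w, pue(total_w, it_w)


for name, cfg in (("air-cooled", air_cooled), ("liquid-cooled", liquid_cooled)):
    total_w, value = summarize(cfg)
    print(f"{name}: total {total_w:.0f} W, PUE {value:.3f}")
```

For this reason, comparing total facility power alongside PUE gives a fuller picture than PUE alone.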

Optimizing cooling is the biggest lever for PUE improvement.

Cooling: The Critical Design Investment

Beyond energy costs, cooling infrastructure represents the second-largest capital expenditure (CapEx) in datacenter development, after the electrical system. Designing effective cooling requires significant upfront investment and poses substantial technical and financial risks.

Hyperscale projects often exceed a billion dollars in CapEx. The speed of technological advancement, especially the sudden jump in density driven by AI, means infrastructure designed even a few years ago may struggle or be unable to support the latest hardware efficiently. The risk of rapid obsolescence is high if cooling solutions aren't flexible and forward-thinking.

The industry faces a potential bottleneck: demand for high-density cooling (like liquid cooling) is accelerating faster than the construction of facilities equipped to handle it. This could force operators into deploying less optimal, "bridge" solutions, impacting efficiency and cost-effectiveness. Cooling is no longer a secondary consideration; it's at the forefront of datacenter design and investment strategy.

Cooling is a multi-billion dollar challenge in building future-ready datacenters.